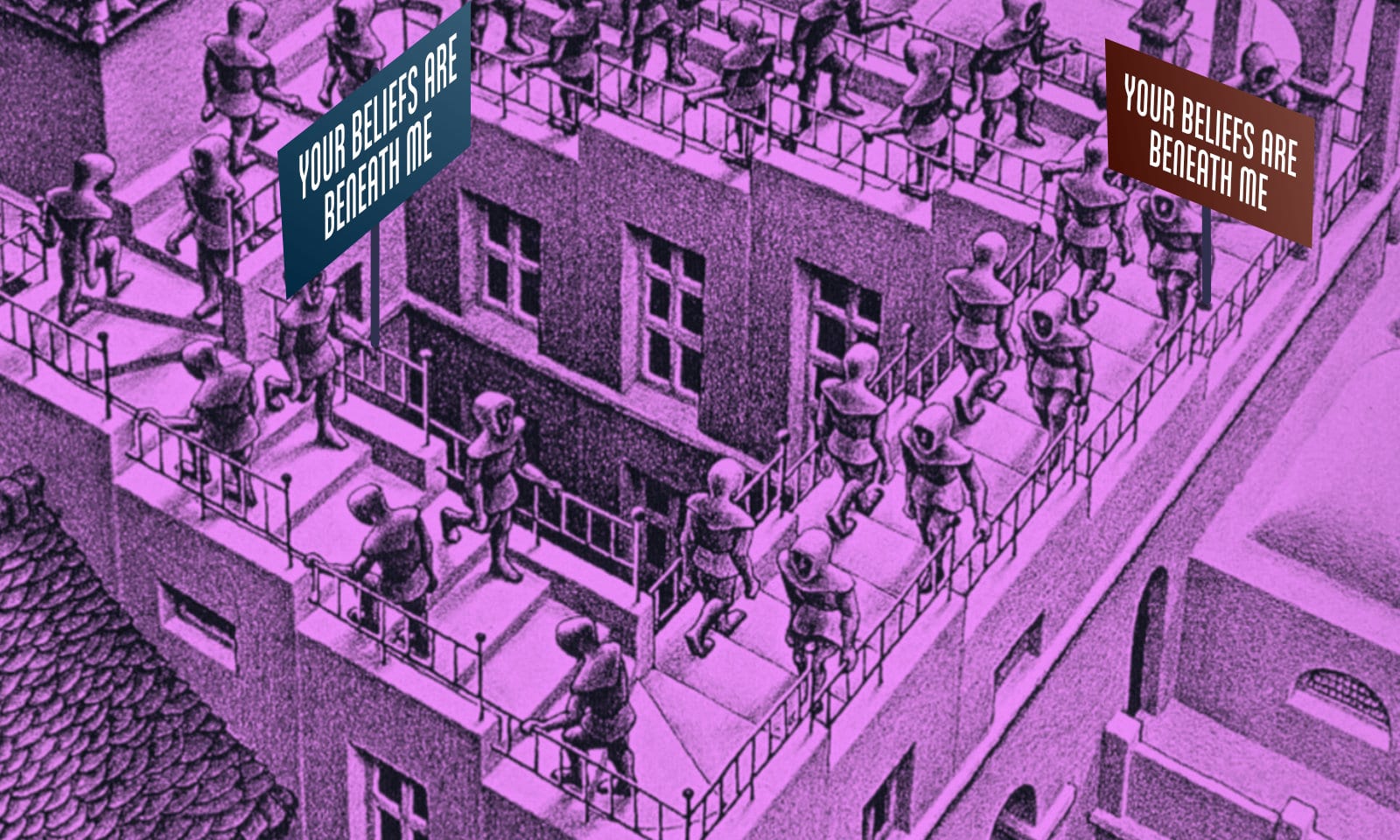

In being critical of the bizarre beliefs of others, we must be careful to examine our own beliefs, some of which may be just as crazy.

It’s virtually impossible to miss the weird, unfounded beliefs that are out there. Especially troubling is seeing one of our colleagues, whom we otherwise admire for their intellect, succumb to one of them. But if this phenomenon is so widespread amongst others, could it be that you have some howlers amongst your own beliefs? As painful as it is to admit, it is clear that you must. You are not, after all, infallible. Unfortunately, detecting our own false beliefs is much harder than detecting those of others. There are two reasons for this difficulty, both of which stem from the nature of belief itself. I think that by examining these reasons I can shed some light on the tools of critical thinking and scientific reasoning that allow us to identify our own false beliefs and admit when we are wrong. But first, there are a couple of issues I want to get out of the way.

First, many reasons are given for our disinclination to admit false beliefs: our reluctance to look bad in front of others by having to admit we were wrong; our desire to win a debate; our loyalty to ‘our side’ when we are defending a belief central to the groups with which we identify, such as our family, political party, or place of employment; or simply a wish to avoid the embarrassment of being caught in a mistake. However, I’m not concerned here with our failure to admit that one of our beliefs is false, because this might simply be a case of lying rather than a failure to recognise the belief as false – and lying is too common to be interesting. In such cases we have merely convinced ourselves that reasons of the sort listed above carry more weight than speaking the truth. What I shall deal with here are the cases where people actually believe weird things, not merely profess them.

Second, it is crucial that we characterise detecting our own false beliefs as difficult, rather than impossible. The reason is that this distinction marks the difference between, on the one hand, recognising that a task requires a great deal of effort, attention, and the acquisition of skills, and, on the other, flatly admitting defeat and surrendering to the inevitable. In fact, if I may be permitted a brief excursion into the infighting amongst philosophers, it represents the distinction between the handwringing, despair and ranting against reason which constitute postmodernism, existentialism, phenomenology, neo-Marxism and Critical Race Theory, and the rest of philosophy. In short, it is the distinction between those who think that critical thinking is possible and those who don’t.

With these caveats out of the way, we can turn to the reasons, rooted in the nature of belief itself, that make it easier to catch the false beliefs of others than to catch our own. First, to believe something is to take that something to be the case. But as soon as you find sufficient reason to think that something is not the case, you don’t believe it anymore. So you cannot look over your beliefs and identify any of them as false. This doesn’t hold for your analyses of others’ beliefs: there is nothing paradoxical in your maintaining that I believe something which is not the case. This problem, by the way, has come to be known as Moore’s Paradox, after the first person to articulate it, the English turn-of-the-last-century philosopher G.E. Moore.((There is a whole host of philosophical papers on the subject, but G.E. Moore first introduced it in “Russell’s ‘Theory of Descriptions’”, in Philosophical Papers, pp. 149–192, New York, Collier Books, 1966.))

This is not really a serious problem; it merely guarantees that identifying a belief as false automatically results in your ceasing to believe it. However, it does explain why we are prone to commit the fallacy of Confirmation Bias. This is the fallacy of looking only at evidence which confirms a belief, and not at evidence which casts doubt on it. We take our belief to be true, so why wouldn’t the evidence back it up? So how do we avoid this natural tendency to reinforce our current beliefs, even the false ones? We need to focus on the evidence rather than on our beliefs. I’ll say more about this in a moment, but first, the second difficulty.

The second difficulty has to do with the way we form our beliefs in the first place, and how we justify them. Very few of our beliefs are original; almost all of them are derived from what we read or hear from others. Yes, some of our beliefs arise from our own reasoning, when we derive original beliefs by reasoning from other beliefs, but even these have their genesis in the beliefs of others. “You can’t make bricks without straw,” as the old saying goes. Logicians have a similar one, in which they substitute “infer” for “make”, “conclusions” for “bricks”, and “premisses” for “straw”. And, somewhere down the line, almost all of those premisses will be derived from the beliefs of others; very few will come from our own original observations. So it is no wonder that we spend more time analysing others’ beliefs than we do our own: every time we adopt a new belief, we are examining the evidence for the claim we are asked to believe, to decide whether it is sufficient for us to take that claim to be true. Granted, we should take more care in adopting new beliefs. But we need beliefs in order to act, and sometimes we need to act quickly. The result is that we behave like a moron in a hurry,((I wish I could claim to have invented this phrase, but the honour goes to Mr Justice Foster of the English High Court, who coined it in the 1978 case Morning Star Cooperative Society v Express Newspapers Limited. The British Communist Party, which already had its official newspaper, the Morning Star, had sought an injunction against Express Newspapers, who wished to start a new tabloid to be called the Daily Star. He ruled against the Communists. In his reasons for judgment he said, “If one puts the two papers side by side I for myself would find that the two papers are so different in every way that only a moron in a hurry would be misled.”)) and adopt a belief without paying enough attention to the quality of the evidence in favour of it. 
So that’s why we have false beliefs and we don’t notice them: we aren’t paying enough attention.

That’s why we need mechanisms to re-examine our beliefs, to check whether we have adopted any false ones. This re-examination process is just what the study of the liberal arts in a university or college is designed to foster, and there are numerous resources on the internet and in the local library to teach and reinforce these skills.((The best I can recommend is the text I used for years in my Critical Thinking classes, Trudy Govier’s A Practical Study of Argument, Enhanced 7th Edition, Belmont, CA, Wadsworth Publishing Co., 2013.)) The development of critical thinking and research skills will minimise – though not entirely eliminate – our false beliefs. All we need to do is remind ourselves to use these skills periodically. One of the best ways to practise these skills is in discussion with others – that is, after all, one of the primary ways we pick up information. However, there is a widely held belief that is as damaging to our ability to cull false beliefs through discussion and reflection as postmodernism or any of the other -isms I mentioned earlier. That belief is captured in some variant of the expression “You can’t reason a person out of a belief he didn’t use reason to form in the first place.”((As far as I can determine, the first person to state this idea was Jonathan Swift: “Reasoning will never make a Man correct an ill Opinion, which by Reasoning he never acquired.” A Letter to a Young Gentleman, Lately Enter’d Into Holy Orders by a Person of Quality, Second Edition, Printed for J. Roberts at the Oxford Arms in Warwick Lane, London, 1721.)) What? Can’t? No one ever changed their mind by reasoning through a false claim they arrived at by irrational means? And they were never helped in this process by rational discussion with others? One of the first lessons of critical thinking is to ask for evidence before accepting such absolute claims. 
In fact, in my 40-plus-year career of debunking weird beliefs, I encountered plenty of evidence against this idea.

My brother Barry and I ended up on the Rolodexes of most media people in British Columbia as the ‘go-to’ people on cults, thanks to our writings and our roles as spokespeople (along with Lee Moller and Gary Bauslaugh, who handled other topics) for the B.C. Skeptics, an organisation promoting scientific literacy and opposing belief in the paranormal and conspiracy theories. Because of this, we were approached a few times by relatives of people embedded in one cult or another and asked to talk with those cult members. Of course the relatives hoped that we could ‘talk them out of’ the cult, but we approached these meetings with much more modest goals. For one thing, we were even more critical of the ‘deprogrammers’ than we were of the cults current at that time. The most extreme of the deprogrammers engaged in kidnapping, forcible detention, hour after hour of haranguing, and other unacceptable practices. We opposed these not only on civil libertarian grounds, but because they simply don’t work. And how could they? Using a method of irrational belief formation on someone in order to get that person to form rational beliefs is simply absurd. In any event, a few cult members did agree to meet with us (one, I vividly recall, met with me in the presence of another, more senior cult member). We confined our discussions to the areas in which we had some expertise: the claims of the cult that we could demonstrate to be false, and how the methods of the cult would make it difficult for its members to see why these claims were false. But we met with the same success rate as the deprogrammers, if you count as ‘success’ the extraction of the person from the cult. I like to think that at least some of the conversations we had with these cult members were of some use in their later decisions to abandon the cult, but none of them ever approached us to let us know.

More important, however, was that in the course of our debunking careers we met a few people who had extracted themselves from various cults before meeting us. We encountered ‘survivors’ of Scientology, the Kabalarian Philosophy (a home-grown Vancouver cult), Sai Baba, the Moonies and the Jehovah’s Witnesses.((Readers may dispute the characterisation of the last group as a cult (and maybe some of the others). But my answer is that cults admit of degrees, depending mainly on the extent to which they restrict members’ access to information that goes against the ideology of the cult. Most cult members live in the world, not in insulated communities – and even the Moonies, the only group on the list which actually practised communal living, regularly sent their members out to proselytize to outsiders. The main mechanism these groups use is the fallacy of Poisoning the Well: teaching their members to reject such information out of hand as coming from the Devil, or at least from people with a motive to corrupt them with claims that, though they may appear right on the surface, contain deep falsehoods.)) Now, the stories of these brave and intelligent people would amount to nothing but a string of anecdotes – which anyone familiar with statistics knows to treat with suspicion – but for two reasons. First, each of them constitutes a counterexample to the claim that it is impossible to reason a person out of a belief they didn’t adopt using reason. These people were either born into the cult in question or lured into it when they were desperate for answers, and were thus paradigms of people adopting a belief without using reason. All it takes is one counterexample to refute an impossibility claim – one counterexample shows that what is claimed to be impossible is possible after all. Second, and more important, all of the people we talked to reported the same basic path towards rejecting the falsehoods they had formerly believed in so fervently. 
Their odyssey began when they noticed a contradiction between the behaviour of people in positions of authority in the cult and the professed beliefs of the cult. In one case, it was the behaviour of the leader. In some of the cases, the hypocrisy involved behaviour of a sexual nature, and our informants knew of it through a close friend or relative who suffered from it. In one case our informant experienced harassment herself, as well as being aware of a relative who suffered. (Readers familiar with cults will know that the leaders of every cult mentioned above except the Jehovah’s Witnesses have been accused of such behaviour. Our informants, however, except in one case, were not close enough to the leader to be directly aware of this.) Once this jarring inconsistency was noted, they began to notice other inconsistencies, to the point where the person’s entire system of belief unravelled. Since that system was anchored by the doctrines of the cult, its unravelling cut the anchor rope, leaving the person free of the cult. You might gather from my description that our informants’ experience was one which ended in an Aha! moment of triumph. Far from it. It was long, drawn out, and filled with anxiety and uncertainty. But, and this is central to my point, their reason was the driving force in the process – the detection of inconsistency is the primary function of reason.

Now, it might be replied that our informants are not counterexamples at all, for they reasoned themselves out of their beliefs; they weren’t reasoned out of their beliefs by others. Well, yes and no. Yes, in the sense that no one directly helped them reason their way out of the belief in the course of one or two conversations – in fact, they did so despite the obstacles the cult placed in their path. But no, for the reason given above: their discovery of an inconsistency involved weighing several beliefs against each other, and at least some of those beliefs were derived from other people. So others, perhaps inadvertently, helped them reason their way out of those false beliefs.

What is the grain of truth in Swift’s quote (there always is some if a saying survives that long, even if it’s just a grain)? It’s that you won’t change people’s false beliefs immediately by getting in their faces and giving them the truth. That holds whether they arrived at the questionable belief through irrational means or by picking up misinformation and applying it rationally. But through systematic rational discussion you may change their minds over time. And you may even catch your own false beliefs if you pay attention to the quality of the evidence for them.